Import data by crawling your website

Import data by crawling your website in Productsup.

Productsup can crawl your website to import additional data. This option is intended for cases where no data source is available and you need to establish one.

The Website Crawler begins from a single start domain, then crawls all website pages.

Warning

The Website Crawler feature only supports static, server-rendered sites. It doesn't work with websites that are rendered dynamically with JavaScript.
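One rough way to judge whether a page is server-rendered is to download its raw HTML without running any JavaScript and look for text you expect to see, such as a product name. The following is a minimal sketch of that idea, not part of Productsup. The function names and the `static-render-check/0.1` user agent are hypothetical.

```python
from urllib.request import Request, urlopen


def fetch_raw_html(url: str, timeout: float = 10.0) -> str:
    """Download a page as plain HTML; no JavaScript is executed."""
    # Hypothetical user agent, used only for this manual check.
    request = Request(url, headers={"User-Agent": "static-render-check/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def appears_in_static_html(html: str, expected_text: str) -> bool:
    """Return True if the expected text is already present in the raw HTML."""
    return expected_text.casefold() in html.casefold()
```

If `appears_in_static_html(fetch_raw_html(url), "your product name")` returns False for a page that shows the product in a browser, the content is probably rendered with JavaScript.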

Prerequisites

The Website Crawler feature is part of the Crawler Module, which is available at an additional cost in all platform editions. Contact support@productsup.com to discuss adding it to your organization.

The Crawler Module contains the following features:

Website Crawler

Data Crawler

Image Properties Crawler

Inform your website admins about the upcoming crawler before running this feature. This notice ensures you won’t face restrictions when you start the Website Crawler.

Set up Website Crawler

Go to Data Sources from your site's main menu, and select ADD DATA SOURCE. Then choose crawler and select Add.

(Optional) Give your data source a custom name. This custom name replaces the name of the data source on the Data Sources page. Then, select Continue.

Enter the website name in Domain, for example, beginning with

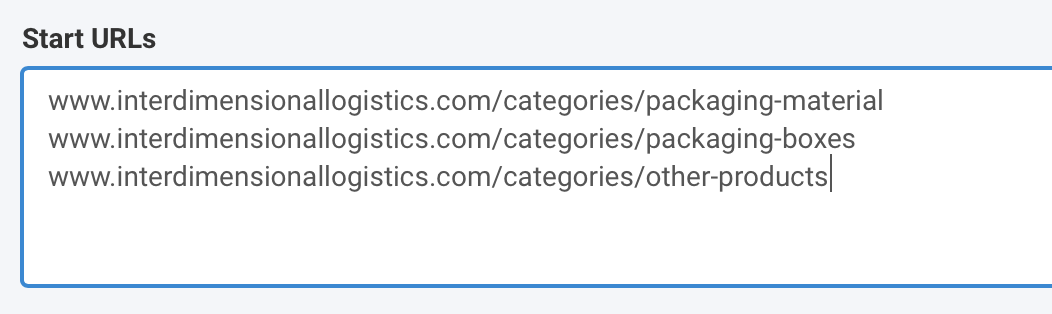

www. The website domain is where Productsup starts crawling data.(Optional) Enter several URLs in Start URLs if product pages or categories don't link to the initial website domain.

Toggle Crawl Subdomains to On if your website has a subdomain. For example, interdimensionallogistics is your company name, and shop identifies your subdomain in your company's shop URL, www.shop.interdimensionallogistics.com.

Ensure you have permission from your website admin to crawl the website and check the Permissions checkbox.

Select Save.
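To illustrate what the Crawl Subdomains toggle means for a given URL, here is a minimal sketch of a scope check. It is not the Productsup implementation. The function names are hypothetical, and treating a leading www. as equivalent to the bare domain is an assumption made for this example.

```python
from urllib.parse import urlsplit


def normalize_host(host: str) -> str:
    """Lowercase the host and drop a leading 'www.' (an assumption of this sketch)."""
    host = host.lower().rstrip(".")
    return host[4:] if host.startswith("www.") else host


def is_in_scope(url: str, domain: str, crawl_subdomains: bool) -> bool:
    """Return True if the URL belongs to the domain or, optionally, one of its subdomains."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    host = normalize_host(host)
    base = normalize_host(domain)
    if host == base:
        return True
    # A subdomain host ends with ".<base domain>".
    return crawl_subdomains and host.endswith("." + base)
```

With the domain www.interdimensionallogistics.com, a URL on www.shop.interdimensionallogistics.com is in scope in this sketch only when `crawl_subdomains` is True.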

Optional Website Crawler advanced settings

Select Use Proxy Server if you want to use your servers to crawl your website.

Enter your proxy server credentials in Proxy Host, Proxy Username, and Proxy Password.

To limit crawling to product pages only, enter a portion of the URL in Link Contains:

The crawler detects URLs containing this URL portion and only crawls those pages.

You can use wildcards (*). See Use wildcards.

To include URLs containing specific keywords, add the keywords in Filters (include).

To exclude URLs containing specific keywords, add the keywords in Filters (exclude).

User Agent is the name the crawler uses to access your website. You can modify the default User Agent according to your needs, for instance, by adding a hash for increased security. See the following examples:

Default:

Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.1.6) Gecko/20070802 SeaMonkey/1.1.4 (productsup.io/crawler)

Modified:

Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.1.6) Gecko/20070802 SeaMonkey/1.1.4 (productsup.io/crawler) 8jbks7698sdha123kjnsad9

You can select the number of crawlers that crawl simultaneously in Concurrent Crawlers.

In Retry on Error, define how many times the crawler should retry pages it can't reach.

In Crawler timeout per page (seconds), set how long the crawler should wait for a page to respond before aborting the request.

You can limit how many pages to crawl in Max count of pages to crawl.

Select Save.
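If you append a hash to the User Agent, your website admins can use it to recognize the crawler's requests on the server side. The following is a minimal sketch of such a check, assuming the hash is the last space-separated part of the User Agent as in the modified example above. The function name and the idea of a shared token are assumptions for illustration, not a Productsup API.

```python
import hmac

# Marker taken from the default User Agent shown in this article.
CRAWLER_MARKER = "productsup.io/crawler"


def is_expected_crawler(user_agent: str, shared_token: str) -> bool:
    """Return True if the User Agent names the crawler and ends with the agreed token."""
    if CRAWLER_MARKER not in user_agent:
        return False
    parts = user_agent.split()
    if not parts:
        return False
    # Compare as bytes in constant time to avoid leaking the token through timing.
    return hmac.compare_digest(parts[-1].encode(), shared_token.encode())
```

Keep in mind that any client can send an arbitrary User Agent, so a check like this helps identify the crawler rather than authenticate it.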

Use wildcards

You can use wildcard characters to save you from having to add precise parameters in Link Contains, Filters (include), and Filters (exclude).

An asterisk (*) matches any number of characters, no matter the characters.

If you input \*/p/, your URL should end with /p/, for example, http://www.test.com/p/.

If you input /p/\*, your URL should start with /p/, for example, /p/123456.

If you input \*/p/\*, this means /p/ can appear anywhere in your URL, for example, http://www.test.com/p/123.

You can use as many asterisks as you wish. For example, \*/cat/\*/p/\* matches: http://www.test.com/cat/123/p/456.

A question mark (?) matches exactly one character, no matter the character.

You can use single or multiple question marks to match a set number of random characters. For example, \*/???/\*/p/\* matches: http://www.test.com/cat/123/p/456.
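The wildcard rules above can be sketched as a small pattern matcher. This is an illustration only, not the matcher Productsup uses. It assumes that the pattern must match the whole URL, that an asterisk may also match zero characters, and that all other characters are taken literally. The function names are hypothetical.

```python
import re


def wildcard_to_regex(pattern: str) -> re.Pattern:
    """Translate a wildcard pattern into a compiled regular expression."""
    parts = []
    for char in pattern:
        if char == "*":
            parts.append(".*")  # any number of characters
        elif char == "?":
            parts.append(".")   # exactly one character
        else:
            parts.append(re.escape(char))  # everything else is literal
    return re.compile("".join(parts))


def matches(pattern: str, url: str) -> bool:
    """Return True if the whole URL matches the wildcard pattern."""
    return wildcard_to_regex(pattern).fullmatch(url) is not None
```

Under these assumptions, `matches("*/???/*/p/*", "http://www.test.com/cat/123/p/456")` is True, while `matches("/p/*", "http://www.test.com/p/123")` is False because the URL does not start with /p/.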